We love them. We hate them. And we really can’t live modern life without them. We’re talking algorithms.

At its most basic, an algorithm is a means of calculating a function via a sequence of actions (or instructions – just like a cooking recipe). I’ve got an algorithm that controls the high beam function on my car. It should dip the lights when it detects an oncoming vehicle, and go to high beam when the vehicle has passed. I really want it to work well – make decisions faster and better than I do. But it can’t. I keep letting it do its thing, in the hope that it learns from my interventions. And it doesn’t.
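To make the recipe idea concrete, here is a minimal sketch of that headlight rule in Python. It is an illustration only, not how any real car implements it: the names `Beam`, `choose_beam` and `drive`, and the idea of a simple yes/no detection signal from a sensor, are assumptions made for this example.

```python
from enum import Enum


class Beam(Enum):
    """The two headlight states described in the text."""
    HIGH = "high"
    LOW = "low"


def choose_beam(oncoming_vehicle_detected: bool) -> Beam:
    """Dip the lights for an oncoming vehicle, otherwise use high beam.

    Assumption: a hypothetical sensor hands us a plain True/False reading.
    """
    return Beam.LOW if oncoming_vehicle_detected else Beam.HIGH


def drive(detections: list[bool]) -> list[Beam]:
    """Apply the same fixed rule to a sequence of sensor readings."""
    return [choose_beam(detected) for detected in detections]


if __name__ == "__main__":
    # A vehicle approaches on the second and third readings, then passes.
    for beam in drive([False, True, True, False]):
        print(beam.value)
```

Notice that nothing in these instructions changes when the driver overrides them. The rule is fixed, so it is only ever as good as the instruction itself and the sensor reading it is given.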

When algorithms go wrong – just like when recipes do – it’s not the fault of the computer (or cookbook). It’s because the instructions are vague or plain wrong. Or – when the algorithm is assisted by machine learning – because the data set used to train it is incomplete or biased.

My car headlights are a trivial example. But below we highlight three stories where poorly designed or biased algorithms create life-changing consequences for humans. Right now, no one regulates algorithms. In the US, the life sciences community is considering whether the FDA should license algorithms used – for example – to design drug trials. But often, algorithms aren’t forensically examined and tested in the public domain until they go wrong – usually in a court of law.

We’ll be looking more deeply into ‘There’s a problem with AI…’ in later editions of The Clec. Hope you enjoy this taster.

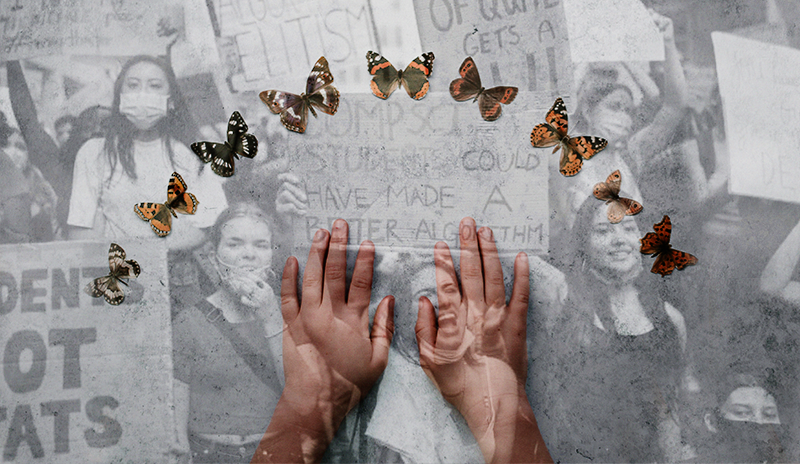

Exam regulators design an algorithm to replace cancelled examinations as a way to determine A-Level and Higher results (university entrance qualifications). The result is that private schools do rather better than expected, while children from poorer backgrounds do much worse. Here’s why.

A lot of us use facial recognition algorithms daily to unlock our mobile devices. Clearly, they have lots of benefits. But research from MIT Media Lab researcher Joy Buolamwini and colleagues has shown facial recognition systems to be more accurate when the subject is a white man, and such systems are known to misidentify people of colour more often than white people. That is a big problem for unregulated systems that are increasingly deployed in law enforcement, for example.

Finally – highly pertinent for the next 3 months: the failure of political forecasting algorithms to predict a Trump win in 2016. That failure is a problem all of its own, and it was fuelled by Facebook’s inability to algorithmically filter fake news in its feeds.

What do you think?